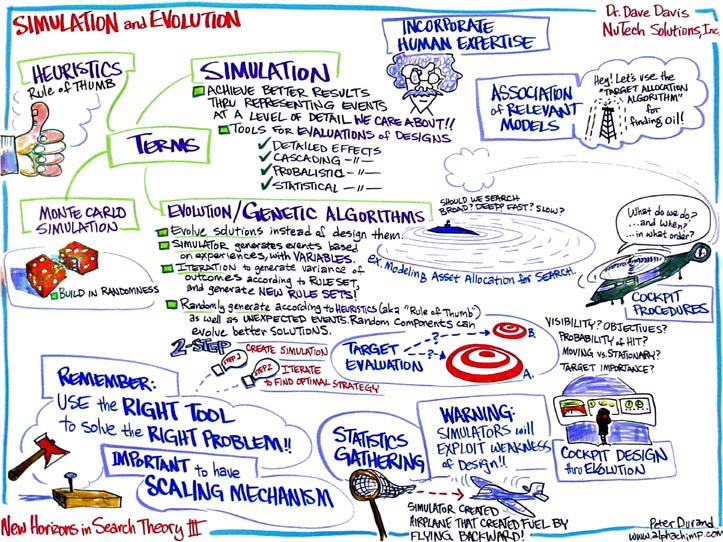

Simulation and Evolution Work Well Together

David Davis, NuTech Solutions, Inc.

One theme in this discussion is that simulation is a way to evaluate a strategy, while evolution is a good way to find a good one.


Terminology

What do we do when we simulate something? Simulations involve reproducing events at the level of detail we care about. This can be done at a fine level of detail (agent-based modeling) as well as at a higher level. Often the outcomes are unexpected.

A simulation is a fixed space that doesn't change, and we try to find a good strategy within this space. Simulations are tools for evaluating our strategy. They capture interactions that are hard to capture in other ways.

Why Evaluate with Simulations?

Simulations represent interactions that we can't capture in other ways. They help us to see what design principles are necessary, and they let us simulate a blend of engagements.

What are Evolutionary Algorithms?

Genetic algorithms simulate evolution on the computer. We "evolve" solutions to hard problems instead of trying to figure them out. They are good where mathematical techniques cannot be applied. Evolutionary algorithms are good when we need a reasonable answer fairly quickly, or when we want to find rule sets or strategies that do well under simulation.
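As a rough illustration of what "evolving" a solution means, the sketch below runs a minimal generational genetic algorithm over bit strings. The representation, the toy fitness function (counting ones), and every parameter value are assumptions chosen for brevity; they are not taken from the talk.

```python
import random

def evolve(fitness, genome_length, pop_size=30, generations=60,
           mutation_rate=0.02, rng=None):
    """Minimal generational genetic algorithm over bit strings (illustrative only)."""
    rng = rng or random.Random(0)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        scored = sorted(population, key=fitness, reverse=True)
        parents = scored[:pop_size // 2]      # only the better half may reproduce
        children = [scored[0][:]]             # elitism: the best survives unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randint(1, genome_length - 1)      # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - bit if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        population = children
    return max(population, key=fitness)

# Toy problem (an assumption): fitness is simply the number of ones in the genome.
best = evolve(fitness=sum, genome_length=20)
```

In the case studies that follow, the role of `fitness` would be played by a simulator run rather than by a one-line function.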

Case Study: Investigating Contacts

The scenario is that you have unidentified contacts in the ocean. Once you have a contact, you want to allocate assets to investigate it. Success in this scenario means that you have determined what the source of the contact was, and that you continue to monitor it if it is of interest.

Some of the problems in the scenario are that the area of the contact could increase in size with time, that different assets may work well together or may hamper each other, and that we need to be able to investigate other contacts if they occur. In the last case, you have to be careful how you allocate your resources. If you make a contact that is located far away and send a slow asset to investigate, then you unintentionally expand your search area, because the area keeps growing while the asset is in transit.

In the simulation, we can model the arrival of contacts probabilistically. When contacts occur, we can modify the probabilities of other contacts. What we learn about the contacts also modifies our view of the probabilities. Some contacts don't represent anything interesting, while others are extremely interesting.

The simulator generates events with probabilities based on our experience. It includes algorithms for computing success rates at finding event sources. It includes algorithms for changing the size of the search area with time. The simulator's measurements of success are sensitive to weather, day/night, season, asset combinations, type of source, and so on.
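The two mechanisms described above, probabilistic contact arrival and a search area that grows with time, might be sketched as follows. This is a minimal sketch under stated assumptions: the hourly time step, the arrival rate, the temporary "boost" after a contact, the share of interesting contacts, and the circular-area model are all hypothetical and do not come from the talk.

```python
import math
import random

def simulate_contacts(hours, base_rate=0.05, boost=2.0, boost_hours=6,
                      interesting_prob=0.2, rng=None):
    """Generate (hour, is_interesting) contact events, one time step per hour.

    Assumption: after each contact, the hourly arrival probability is
    multiplied by `boost` for the next `boost_hours` hours.
    """
    rng = rng or random.Random(1)
    events = []
    boosted_until = -1
    for hour in range(hours):
        rate = base_rate * boost if hour <= boosted_until else base_rate
        if rng.random() < min(rate, 1.0):
            events.append((hour, rng.random() < interesting_prob))
            boosted_until = hour + boost_hours   # a contact changes later odds
    return events

def search_area_km2(hours_since_contact, max_speed_kmh=20.0,
                    initial_radius_km=2.0):
    """Area of the circle the source could have reached (hypothetical model)."""
    radius = initial_radius_km + max_speed_kmh * hours_since_contact
    return math.pi * radius ** 2

events = simulate_contacts(hours=24 * 30)   # one simulated month
area_after_3h = search_area_km2(3)          # area if an asset needs 3 hours to arrive
```

Under this model the area grows with the square of the delay, which is one way to see why sending a slow asset to a distant contact is costly.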

The simulator works only as well as our rule sets. Some rule sets start with randomly generated rules, others with rules that represent human heuristics. Through the simulator you can evolve better and better rule sets. You can simulate months or years of activity to evaluate a rule set. Using the desired features of the problem, you can decide which are the good rule sets and which are the bad ones. More rule sets are then created, letting the good ones proliferate more than the bad ones. Rule sets can also mutate and cross-breed.
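The reproduction step described here, where good rule sets proliferate more than bad ones and offspring are mutated and cross-bred, could look roughly like this. The sketch assumes each rule set is a list of numeric parameters and that `scores` came from simulator runs; both the representation and the function name `next_generation` are hypothetical.

```python
import random

def next_generation(rule_sets, scores, mutation_scale=0.1, rng=None):
    """Breed a new population; better-scoring rule sets are chosen more often."""
    rng = rng or random.Random(2)
    # Shift scores so every selection weight is positive; the worst rule set
    # gets a weight close to zero and is almost never picked.
    low = min(scores)
    weights = [score - low + 1e-9 for score in scores]
    children = []
    for _ in range(len(rule_sets)):
        # Fitness-proportionate choice of two parents (with replacement).
        parent_a, parent_b = rng.choices(rule_sets, weights=weights, k=2)
        # Cross-breeding: each parameter is inherited from one parent at random.
        child = [rng.choice(pair) for pair in zip(parent_a, parent_b)]
        # Mutation: a small random nudge to every parameter.
        child = [gene + rng.gauss(0, mutation_scale) for gene in child]
        children.append(child)
    return children

# Hypothetical rule sets with two parameters each, and made-up simulator scores.
population = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0], [0.2, 0.8]]
scores = [1.0, 3.0, 0.5, 2.0]
children = next_generation(population, scores)
```

Repeating this step, with a fresh round of simulation to score each new population, gives the evolve-and-evaluate loop the paragraph describes.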

Case Study: Target Allocation

Suppose you have a force faced with a group of approaching unfriendly objects. How should you allocate fire in order to achieve your goals? Early decisions can influence later ones. Important targets should receive more attention than less important targets. Some interactions between weapon types are important.

How do we evaluate a target allocation strategy? Under a good strategy, important targets have a high probability of being eliminated, while the probability of losing members of our own force is low. We also want to minimize the duration of the interaction, the expenditure of our ammunition, and the loss of our crew.
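One simple way to turn these goals into a single number that an evolutionary algorithm can rank is a weighted sum over the outcome of a simulated engagement. The sketch below is only an assumption about how that might be done: the outcome fields, the weights, and the choice of a weighted sum are hypothetical, and the talk does not say how the objectives were actually combined.

```python
# Hypothetical weights: positive values reward, negative values penalize.
DEFAULT_WEIGHTS = {
    "important_targets_eliminated": 10.0,
    "force_members_lost": -20.0,
    "duration_minutes": -0.1,
    "rounds_expended": -0.01,
    "crew_lost": -50.0,
}

def engagement_score(outcome, weights=DEFAULT_WEIGHTS):
    """Weighted sum of the outcome measures of one simulated engagement."""
    return sum(weights[key] * outcome[key] for key in weights)

# Made-up outcome of a single simulated engagement.
outcome = {
    "important_targets_eliminated": 3,
    "force_members_lost": 0,
    "duration_minutes": 20,
    "rounds_expended": 100,
    "crew_lost": 0,
}
score = engagement_score(outcome)
```

Changing the weights changes which strategies look good, so different objectives would call for different weightings.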

This problem can be handled just like investigating contacts, except that the contacts are all considered at the same time. A simulation of the interaction is a good way to evaluate a blend of weaponry and a targeting strategy. An evolutionary algorithm can be used to find good target allocation rule sets.

There are different rule sets for different types of engagements. For example, the targets may be aircraft, boats, or mixed types, or they may be far away and of unknown type. Other types of engagement involve motion and time constraints.

Below are some examples of rules for target allocation:

- Target the incoming object with the highest combination of importance and residual hit probability (low visibility)

- Switch targets when the probability of kill of the current target is greater than 96%

- Target the guns with the highest probability of kill first
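Rules like the first two above can be written as small functions that a simulator calls at each decision point. The sketch below is a hedged illustration: the `Target` fields, the use of a product as the "combination" of importance and residual hit probability, and the function names are assumptions. Only the 96% switching threshold comes from the rules listed.

```python
from dataclasses import dataclass

SWITCH_THRESHOLD = 0.96   # from the second rule above

@dataclass
class Target:
    name: str
    importance: float                # hypothetical scale from 0 to 1
    residual_hit_probability: float  # chance that the next shot hits
    kill_probability: float          # estimated chance it is already destroyed

def should_switch(current):
    """Rule 2: stop firing at a target that is very probably destroyed."""
    return current.kill_probability > SWITCH_THRESHOLD

def choose_target(targets):
    """Rule 1: pick the best importance x hit-probability among live targets."""
    live = [t for t in targets if not should_switch(t)]
    if not live:
        return None
    return max(live, key=lambda t: t.importance * t.residual_hit_probability)

targets = [
    Target("alpha", importance=0.9, residual_hit_probability=0.5, kill_probability=0.10),
    Target("bravo", importance=0.6, residual_hit_probability=0.9, kill_probability=0.20),
    Target("charlie", importance=1.0, residual_hit_probability=1.0, kill_probability=0.99),
]
chosen = choose_target(targets)   # "bravo": 0.6 * 0.9 beats 0.9 * 0.5
```

In an evolutionary setting, constants such as the threshold or the way the two factors are combined would be the parameters that mutate and cross-breed.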

This simulation also requires you to evolve good rule sets. Evolve a high-performance rule set by putting each candidate through a very large number of simulated engagements of the expected types, weighted by probability. Evolve rule sets for different types of engagements by starting a different evolutionary process for each type, and creating rule sets that function well for that type of engagement. Evolve different rule sets depending on the objectives: high survivability, high kill rate, deterrence, interdiction, etc.
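Evaluating a candidate over many engagements "weighted by probability" can be approximated by sampling engagement types according to how likely they are and averaging the simulated scores. In the sketch below, the engagement mix, the `simulate` callback signature, and the toy stand-in simulator are all assumptions made for illustration.

```python
import random

def expected_fitness(rule_set, simulate, engagement_mix, runs=1000, rng=None):
    """Average simulated score, sampling engagement types by their probability."""
    rng = rng or random.Random(3)
    kinds = list(engagement_mix)
    weights = [engagement_mix[kind] for kind in kinds]
    total = 0.0
    for _ in range(runs):
        kind = rng.choices(kinds, weights=weights, k=1)[0]
        total += simulate(rule_set, kind, rng)
    return total / runs

def toy_simulate(rule_set, kind, rng):
    """Hypothetical stand-in for a real engagement simulator.

    Here a rule set is just a dict mapping engagement type to a success chance.
    """
    return 1.0 if rng.random() < rule_set[kind] else 0.0

mix = {"aircraft": 0.5, "boats": 0.3, "mixed": 0.2}       # made-up probabilities
candidate = {"aircraft": 0.8, "boats": 0.6, "mixed": 0.4}
fitness = expected_fitness(candidate, toy_simulate, mix)
```

To evolve a specialist rule set for one engagement type instead, the same function can be called with a mix that contains only that type.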

Case Study: NASA In-Cockpit Procedures Studies

This simulation is for the A3I project (Army-NASA Aircrew Aircraft Integration), also called MIDAS. In this simulation, we simulated the effects of required procedures on cockpit crews (commercial aircraft and Apache helicopter crews). For the commercial crews, we simulated cockpit information systems and their effect in normal and emergency situations. For the helicopter crews, we simulated the effectiveness of mission procedures.

An example of a simulator event for this case study would be that there is a truck convoy ahead of us. We assign two helicopters to locate it and deliver a missile strike. We use pop-up and jinking procedures to do reconnaissance and to evade ground-to-air missiles. One pilot locates the target for the other. Then we model radio procedures, cognitive procedures, and situational awareness. The simulation is critical in assessing the impact of different equipment and mission strategies.

We can combine evolution and simulation to measure pilot effectiveness across hundreds of thousands of mission simulations and find the best strategies. We can also evolve cockpit displays to find those that give the highest levels of performance across hundreds of thousands of mission simulations.

In one simulation, we were tasked with simulating a plane that could go fast and stay up in the air a long time. It is very difficult for a plane to do both of these things. We gave the simulator some parameters like the engine size, the width of the cockpit, etc. Then we ran some simulations to see how fast the plane could go and how long it could stay up. The design that came out of the simulator was 30% better. The only problem was that in the simulation the plane flew backward, and generated fuel as it flew! This is a caveat with simulations. You may have to spend time debugging cases you assumed nobody would ever try, because the evolutionary search will try the most outlandish solutions in order to succeed.

Case Study: Interpreting Data

We get LOFARgrams from a listening apparatus. Can we get a computer to simulate what human sonar people do? Humans can analyze the source of contacts based on experience. They can tell which contacts may be whales or fishing boats. Human experts can interpret the signals with high accuracy. Humans tend to be best in the region and conditions in which they were trained (for example: Pacific, no storms, no whales in the background). Humans are excellent at pattern recognition. Can a computer do what the human eye can do at a glance?

The task in this scenario was to produce an expert system that can do what the humans do. A big difficulty was identifying visual patterns that humans see easily ("lines" in the data). The expert system techniques did not produce good results at line-tracing.

The development team used a genetic algorithm. They used hundreds of LOFARgrams marked by humans so that the interesting lines were identified. The genetic algorithm evolved rule sets for interpreting the data. A rule was evaluated based on how well it matched the human analysis. Over time, the system learned to perform this task as well as humans. By changing the training cases, the system could learn to do this in different locations, conditions, and types of background noise.
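Scoring a rule by how well it matches the human analysis could be done by comparing the cells a rule marks as "line" against the cells the human marked. The sketch below is a deliberately simplified assumption: a LOFARgram is treated as a small grid of intensities, a rule is a single intensity threshold, and agreement is measured with an F1 score. None of these choices is described in the talk.

```python
def apply_rule(gram, threshold):
    """Hypothetical rule: a cell belongs to a line if its intensity is high enough."""
    return [[1 if cell >= threshold else 0 for cell in row] for row in gram]

def agreement(predicted, marked):
    """F1 score between the cells a rule marks and the cells a human marked."""
    true_pos = false_pos = false_neg = 0
    for predicted_row, marked_row in zip(predicted, marked):
        for p, m in zip(predicted_row, marked_row):
            if p and m:
                true_pos += 1
            elif p:
                false_pos += 1
            elif m:
                false_neg += 1
    if true_pos == 0:
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)

def rule_fitness(threshold, training_cases):
    """Average agreement with the human markings over all training cases."""
    scores = [agreement(apply_rule(gram, threshold), marked)
              for gram, marked in training_cases]
    return sum(scores) / len(scores)

# One tiny made-up training case: a vertical line in the middle column.
gram = [[0.1, 0.9, 0.2],
        [0.2, 0.8, 0.1]]
marked = [[0, 1, 0],
          [0, 1, 0]]
cases = [(gram, marked)]
good = rule_fitness(0.5, cases)    # marks exactly the human-marked cells
loose = rule_fitness(0.15, cases)  # also marks background cells
```

Swapping in training cases from a different region or noise background changes what the same evolutionary process learns, which matches the point about changing the training cases.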

Conclusions

Simulations can be more accurate and informative than high-level or mathematical models of an event. Probabilistic simulations show us what can happen under a wide variety of conditions. Many interesting problems can be solved very well if we simulate, evaluate, and evolve.

Questions:

What if you leave something out and it completely destroys you?

Genetic algorithms always include human heuristics. If something destroys you, add it to the algorithm. It is always good if a genetic algorithm starts with some good solutions. The algorithm needs a good place to start, and then it can evolve from there toward a better solution.

How do you account for humans in the system? Humans have varying levels of competence; how do you include that in your genetic algorithms?

We want a system that works across the range between good and bad human responses. We incorporate experiences and performances from many people into the simulation. We get as much human expertise as we can to help us understand the cognitive part of the simulation.
